Introduction

Dynamic programming is a technique to optimize a recursive problem's time complexity from exponential to polynomial time. This technique stores the results of the sub-problems generated during the time of recursion and uses these results if needed again. By doing this the time complexity comes down to a great extent. The main use of dynamic programming is to solve optimization problems. Here, optimization problems mean that when we are trying to find out the minimum or the maximum solution to a problem. Dynamic programming guarantees to find the optimal solution of a problem if the solution exists.

Example

Let's understand this approach through an example.

Consider an example of the Fibonacci series. The following series is the Fibonacci series:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, …

The numbers in the above series are not randomly calculated. Mathematically, we could write each of the terms using the below formula:

F(n) = F(n-1) + F(n-2),

Here n is the nth term in the series starting from 1.

With the base values F(0) = 0, and F(1) = 1. To calculate the other numbers, we follow the above relationship. For example, F(2) is the sum F(0) and F(1), which is equal to 1.

How can we calculate F(20)?

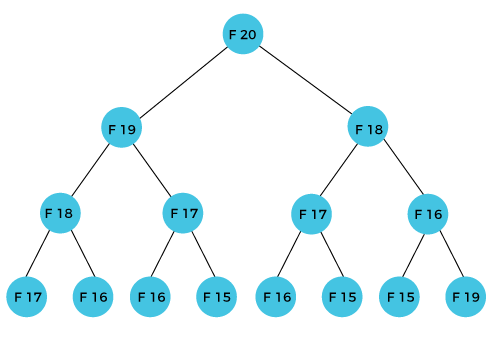

The F(20) term will be calculated using the nth formula of the Fibonacci series. The below figure shows how F(20) is calculated.

As we can observe in the above figure that there is a binary tree that forms. In this tree, you can see that the root node is F(20) which we are trying to find out. to compute F(20) we need to sum F(19) and F(18). Similarly to compute F(19) we need to sum F(17) and F(18). So you may notice that many values are repeated in this tree two or more times. If we solve this problem using brute force or just normal recursion then these values will be calculated every time they appear or need to be calculated to find other values. To solve this issue dynamic programming stores these values the first time they are calculated to save time.

In the above example, if we calculate the F(18) in the right subtree, then it leads to the tremendous usage of resources and decreases the overall performance.

The solution to the above problem is to save the computed results in an array. First, we calculate F(16) and F(17) and save their values in an array. The F(18) is calculated by summing the values of F(17) and F(16), which are already saved in an array. The computed value of F(18) is saved in an array. The value of F(19) is calculated using the sum of F(18), and F(17), and their values are already saved in an array. The computed value of F(19) is stored in an array. The value of F(20) can be calculated by adding the values of F(19) and F(18), and the values of both F(19) and F(18) are stored in an array. The final computed value of F(20) is stored in an array.

How does the dynamic programming approach work?

The following are the steps that the dynamic programming follows:

It breaks down the complex problem into simpler subproblems.

It finds the optimal solution to these sub-problems.

It stores the results of subproblems (memoization). The process of storing the results of subproblems is known as memorization.

It reuses them so that the same sub-problem is calculated more than once.

Finally, calculate the result of the complex problem.

Where to apply

The above five steps are the basic steps for dynamic programming. Dynamic programming is applicable that has properties such as:

Those problems that are having overlapping subproblems and optimal substructures. Here, optimal substructure means that the solution to optimization problems can be obtained by simply combining the optimal solution of all the subproblems.

In the case of dynamic programming, the space complexity would be increased as we are storing the intermediate results, but the time complexity would be decreased.

Approaches of dynamic programming

There are two approaches to dynamic programming:

Top-down approach

Bottom-up approach

Top-down approach (Memoization)

The top-down approach follows the memorization technique, while the bottom-up approach follows the tabulation method. Here memorization is equal to the sum of recursion and caching. Recursion means calling the function itself, while caching means storing the intermediate results.

The basic recursive function for the Fibonacci problem also known as brute force in Java is this:

class Solution{

public int fib(int n){

if (n==0)

return 0;

if(n==1||n==2)

return 1;

return fib(n-2)+fib(n-1);

}

}

Now to apply a Top-down approach to this we need to use memoization, which means we need to store the intermediate results of the recursive function into an array and use them.

We are going to use an array memo[] to store the values and optimize the above code to a polynomial time complexity.

class Solution{

public int fib(int n, int[] memo){

if(memo[n]!=0)

return memo[n];

if (n==0)

return 0;

if(n==1||n==2)

return 1;

memo[n]=fib(n-2)+fib(n-1);

return memo[n];

}

}

As you can see in the above code we have implemented Memoization. the nth term in the series is only calculated once and then stored in the memo[] array to be of use later in the program. The function first checks if the value of the nth term is already present in the array or not, if it is there then that call ends returning the stored value.

The main advantage of this approach is that it is easy to implement and frame the algorithm.

Bottom-Up approach (Tabulation method)

The bottom-up approach is also one of the techniques which can be used to implement dynamic programming. It uses the tabulation technique to implement the dynamic programming approach. It solves the same kind of problems but it removes the recursion. If we remove the recursion, there is no stack overflow issue and no overhead of the recursive functions. In this tabulation technique, we solve the problems and store the results in a matrix.

The bottom-up is the approach used to avoid the recursion, thus saving memory space. The bottom-up is an algorithm that starts from the beginning, whereas the recursive algorithm starts from the end and works backward. In the bottom-up approach, we start from the base case to find the answer for the end. As we know, the base cases in the Fibonacci series are 0 and 1. Since the bottom approach starts from the base cases, so we will start from 0 and 1.

Key points

We solve all the smaller sub-problems that will be needed to solve the larger sub-problems then move to the larger problems using smaller sub-problems.

We use for loop to iterate over the sub-problems.

The bottom-up approach is also known as the tabulation or table-filling method.

Example

We will be understanding this approach by the same problem to get you a good idea of the difference and the implementation.

In the bottom-up approach, we use the for loop and start from the base cases to find the answer to larger cases. it is the same idea in which we first solve the smaller sub-problems to get the solution to a larger problem.

Keep this formula in mind while looking at the below explanation.

F(n) = F(n-1) + F(n-2)

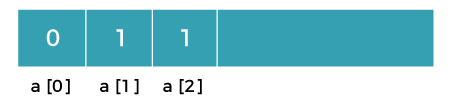

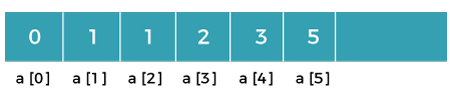

Suppose we have an array that has 0 and 1 values at a[0] and a[1] positions, respectively shown below:

Since the bottom-up approach starts from the lower values, the values at a[0] and a[1] are added to find the value of a[2] shown below:

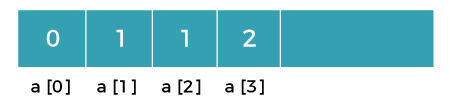

The value of a[3] will be calculated by adding a[1] and a[2], and it becomes 2 shown as below:

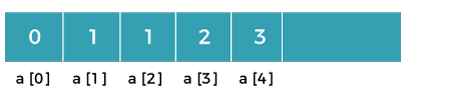

The value of a[4] will be calculated by adding a[2] and a[3], and it becomes 3 shown as below:

The value of a[5] will be calculated by adding the values of a[4] and a[3], and it becomes 5 shown as below:

Like this, the values of higher cases are computed by adding the previous two elements in the array. Let's look at its code implementation.

class Solution{

public int fib(int n){

int[] a= new int[n+1];

a[0]=0;

a[1]=1;

for(int i=2;i<=n;i++){

a[i]=a[i-1]+a[i-2];

}

return a[n];

}

}

As you can see in the above code we output a[n] as the answer which means that the nth term in the array is the nth term in the sequence. This approach is the tabulation approach in dynamic programming and it is used very widely. It is a little hard for beginners to understand/implement but with practice, you can easily grab the concept.

Some Famous Dynamic Programming Problems

1. Identifying the number of ways to cover a distance

You can find this problem here:

Some recursive functions are invoked three times in the recursion technique, indicating the overlapping subproblem characteristic required to calculate issues that use the dynamic programming methodology.

Using the top-down technique, just store the value in a HashMap while retaining the recursive structure, then return the value store without calculating each time the function is invoked. Utilize an extra space of dimension n when employing the bottom-up method and compute the values of states beginning with 1, 2,…, n, i.e., compute the values of I i+1 and i+2 and then apply them to determine the value of i+3.

2. Identifying the optimal strategy of a game

You can find this problem here:

To identify the optimal strategy of a game, let’s consider the “coins in a line” game. The memoization technique is used to compute the maximum value of coins taken by player A for coins numbered h to k, assuming player B plays optimally (Mh,k). To find out each player’s strategy, assign values to the coin they pick and the value of the opponent’s coin. After computation, the optimal design for the game is determined by observing the Mh,k value for both players if player A chooses coin h or k.

3. Counting the number of possible outcomes of a particular die roll

You can find this problem here:

With an integer M, the aim is to determine the number of approaches to obtain the sum M by tossing dice repeatedly. The partial recursion tree, where M=8, provides overlapping subproblems when using the recursion method. By using dynamic programming, one can optimize the recursive method. One can use an array to store values after computation for reuse. In this way, the algorithm takes significantly less time to run with time complexity: O(t\n**m), with t being the number of faces, n being the number of dice, and m being the given sum.

Conclusion

Dynamic Programming is one of the advanced skills in programming. One must first understand the basics of programming before jumping to this topic. But this is a very important technique in the problem-solving area. This is a very frequent interview question in most good-paying companies so you must have a good grasp on this concept to solve the question.

Thank you for reading this article.